A while back I wrote a Twitter thread about the next frontier of Web3 Fashion.

The three technologies I see pushing the envelope of Web3 Fashion are:

AI

Generative engine

Oracles

The first part of this series focuses on the possibilities that AI introduces to Web3 Fashion. An entire article could be written on AI’s implications for traditional fashion processes alone.

However, I’ll specifically focus on the question: How can AI impact Fashion NFTs?

Before we get into the possibilities, it’s helpful to dissect an NFT into its components. There are multiple ways to break down the NFT stack, but I find it helpful to split it into 1) Art, 2) Utility and 3) Smart Contract.

Art

The most obvious way AI can influence Fashion NFTs is through art creation (including the design process). We’ve already seen some innovative AI art implementations in Web3:

The Fabricant used an AI model that uses Paris Fashion Week runway looks as inputs for design inspiration

RTFKT created an AI painting with MGXS that dynamically changed as the bids came in

Refik Anadol utilized AI to morph 10 million photos of New York into pieces of art

Some Specific Ways Brands Can Utilize AI for Their Art:

Neural Style Transfer — Remixes and Collaborations

Neural style transfer (NST) essentially takes the style of image A and applies it to the content of image B. This is what Levi’s did with its “Starry Night” trucker jacket: they used a neural network whose inputs included Van Gogh’s Starry Night painting.
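To make "style" a bit more concrete, here is a minimal sketch of the Gram-matrix style loss that many neural style transfer implementations use. It assumes the feature maps have already been extracted (in practice they come from a pretrained convolutional network); the toy numbers and the function names `gram_matrix` and `style_loss` are illustrative assumptions, not the method Levi’s used.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of channels, each a flat list of activations.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
        for ci in features
    ]


def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


# Toy feature maps: 2 channels, 4 spatial positions each.
style_features = [[1, 2, 3, 4], [4, 3, 2, 1]]
# Same activations with the spatial positions reversed.
rearranged = [[4, 3, 2, 1], [1, 2, 3, 4]]
# A feature map with a different channel mix.
different = [[1, 1, 1, 1], [0, 0, 0, 0]]

print(style_loss(rearranged, style_features))
print(style_loss(different, style_features))
```

The Gram matrix ignores where a feature appears and only records which features occur together, which is why the rearranged map scores as the same "style" while the different one does not. A full style transfer would repeatedly adjust the generated image to shrink this loss alongside a separate content loss.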

Style transfer is well suited not only to such historical reinterpretations but to brand collaborations as well. It can act as a starting point for designers, producing unique design candidates in seconds. As an example, below, I used neural style transfer to transfer the famous Louis Vuitton pattern onto Nike Air Force 1s with just a few clicks. You can imagine the interesting results we could get with a bit of tweaking of the AI model.

Image Synthesis — Train AI On Your Own Data

StyleGAN image synthesis is another interesting opportunity for projects to experiment with. It allows brands to train the AI model with custom inputs and receive synthesized images as outputs.

Ideally, brands would train the AI on meaningful inputs, e.g. images of the city they were created in, their mood board or their core message. Think of it as a DALL·E that is trained on your own images instead of the internet’s vast repository.

A good example of this outside the realm of Web3 Fashion is the NFT project Synthetic Dreams by Refik Anadol. It consists of imaginary AI-made landscapes that use 200 million raw images of landscapes around the world as input data.

There are multiple interesting AI-enabled tools that can be used in the fashion design process:

This Sneaker Does Not Exist contains free imaginary shoes created by AI (VQGAN and CLIP). There are even a few parameters you can tweak.

ClothingGAN allows users to synthesize completely new clothes from scratch.

The AI programming software Chimera “is a first-of-its-kind VAE-GAN [a type of AI algorithm] that has been trained to design clothing for brands and individuals, outputting an edited 3D model and the 2D flat patterns to sew the garment”. It works on mood board images and text inputs.

3D Assets

While AI-generated art can be applied to digital and physical clothing designs, an important Web3 Fashion narrative is digital fashion in the metaverse. These assets are essentially 3D files instead of JPEGs.

AI is quickly transforming the 3D asset space as well. For instance, Google’s DreamFusion (still closed to the public) generates 3D assets from text. While the technology is still nascent, if DALL·E’s incredibly fast progress is any guide, it’s not inconceivable that such 3D technologies will become available to the public relatively soon.

Other companies that are pushing the 3D asset space are NVIDIA and LumaAI. They both allow users to turn 2D pictures into 3D scenes and assets in minutes.

In addition to unique digital assets, entire game environments have been created without 3D artists. Avatar Runner, a game by Anything World and Ready Player Me, was “built in less than 2 weeks with no 3D artist thanks to Anything World”.

Overall, this will make the creation of 3D NFTs and metaverse environments easier than ever. One potential consequence is the democratization of Decentraland and Roblox user-generated content (UGC) as well as easier 3D asset generation for companies with existing intellectual property (IP).

Utility

While the utility narrative has generally received a bad rap in the NFT community, there are interesting potential AI-specific utility use cases.

NFT = Trained ML/AI model

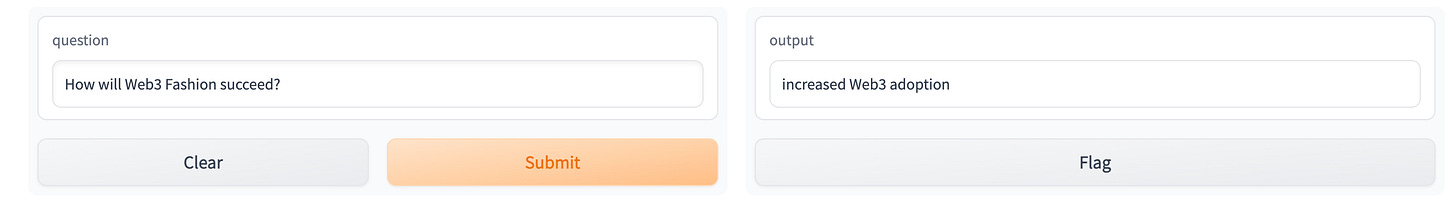

While the most popular AI tools generally use freemium models, there could be room for proprietary trained/curated models (e.g. question answering, image or audio generation). As a proof of concept, I trained an existing Natural Language Processing (NLP) model using my previous Substack posts. The answers to the questions were decent even with such a limited dataset (see pics below).

ASM Brains is a great example of an NFT project that incorporates utility-based AI models. The project allows you to train your “brain” (i.e. the NFT) via machine learning, and the trained brain could then be applied to AIFA (the Altered State Machine play-and-earn game), trading bots, music, etc.

NFT = Proprietary dataset that can be plugged into an AI model

What if there was a curated set of inputs that would teach an AI model to output something of value? The output could be anything from appealing images to niche answers.

NFT = Train-to-Earn AI companion

An interesting non-NFT project that contains some lessons for the NFT community is Replika. At its core it’s an AI companion (chatbot); however, it can be upgraded and personalized via gems. Gems can be earned by chatting with the AI companion or by purchasing them (see pic below). Interestingly, the more you talk to Replika, the smarter it becomes. Could this be the birth of a train-to-earn model?

Gems allow you to customize the outfit and appearance of the AI companion. Not only is this great from the user perspective, but this would also create a natural fit for mid to higher-end digital fashion studios like The Fabricant.

Replika is currently used for companionship, note-taking, coaching and diary logs, among other purposes. The more you train the AI companion, the more it becomes like its “human”, which creates interesting opportunities for imparting your knowledge or personality to it. Maybe one day people could talk with previous generations vicariously via their AI companions.

A Web3 implementation of a Replika-type companion would offer unique advantages because of Web3’s inherent values: transparency would alleviate concerns about centralized data, and ownership would allow you to sell your knowledge.

Smart Contracts

Code: OpenAI Codex is pushing the envelope of coding in plain English. It currently supports popular languages like Python, but it’s not inconceivable that Solidity support will be added at some point.

Metadata: Metadata can act as an important input into the AI model. While metadata often describes the visual attributes of an NFT and could describe the inspiration for AI image generation (e.g. skateparks in LA), it can be applied in more interesting ways as well. Relevant attributes could represent the sophistication of an AI model (e.g. OpenAI categorizes its models into four levels, from Davinci to Ada), the available computing power, or the purpose of the model (ASM Brains groups AI functionality based on the NFTs’ DNA). Overall, metadata is a good way to 1) differentiate assets within an NFT collection and 2) create unique composability across different collections. I’ll discuss metadata in more depth in Part 3 of this Future of Web3 Fashion series.
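As a rough illustration of the attributes mentioned above, here is a sketch of NFT metadata in the common name/image/attributes layout that many marketplaces read. The trait names ("Inspiration", "Model Tier", "Model Purpose"), the token names, the image URIs and the helper functions are all hypothetical choices for this example, not a standard or any project’s actual schema.

```python
import json


def build_metadata(name, image_uri, traits):
    """Build a metadata dict in the name/image/attributes layout."""
    return {
        "name": name,
        "image": image_uri,
        "attributes": [{"trait_type": k, "value": v} for k, v in traits.items()],
    }


def trait(metadata, trait_type):
    """Return the value of one trait, or None if the token lacks it."""
    for attr in metadata["attributes"]:
        if attr["trait_type"] == trait_type:
            return attr["value"]
    return None


def compatible(a, b, trait_type="Model Purpose"):
    """Tokens from different collections 'compose' if they share a trait value."""
    value = trait(a, trait_type)
    return value is not None and value == trait(b, trait_type)


# Hypothetical tokens from two different collections.
jacket = build_metadata(
    "Jacket #1",
    "ipfs://example/jacket-1.png",
    {
        "Inspiration": "skateparks in LA",
        "Model Tier": "Davinci",
        "Model Purpose": "image generation",
    },
)
brain = build_metadata(
    "Brain #7",
    "ipfs://example/brain-7.png",
    {"Model Tier": "Ada", "Model Purpose": "image generation"},
)

print(json.dumps(jacket, indent=2))
print(compatible(jacket, brain))
```

Here the "Inspiration" trait could feed an image-generation prompt, "Model Tier" differentiates assets within a collection, and the shared "Model Purpose" trait is one simple way two collections could recognize each other’s tokens.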

Other AI Web3 Use Cases

Airdrop engine — choose what to airdrop and to whom based on wallet activity

Recommendation engine — because you liked this NFT, you’ll probably like that one as well

Metaverse shopping:

Meta is trying to make shopping easier for everyone via AI personalization and recommendation tools. Undoubtedly this would not only apply to Meta’s current marketplaces but also to future metaverse marketplaces. The number of assets in a busy metaverse/game will be enormous. To highlight relevant items and personalize experiences, AI will have to be implemented. Things like metaverse concerts, clubs, and games could be used as inputs.

Metaverse environment customization — environment changes based on who the user is

AI stylists — AI learns your preferences and pairs clothing items to save you time and effort
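To make the recommendation engine idea from the list above more tangible, here is a minimal sketch based on wallet overlap: wallets that hold similar NFTs are used to suggest items the target wallet does not own yet. The wallet addresses, item names and the `recommend` function are made up for illustration, and Jaccard similarity is just one simple choice; a production system would likely use richer signals.

```python
from collections import defaultdict


def recommend(holdings, wallet, top_n=3):
    """Suggest items held by wallets whose holdings overlap with `wallet`.

    `holdings` maps a wallet address to the set of items it owns.
    """
    owned = holdings[wallet]
    scores = defaultdict(float)
    for other, items in holdings.items():
        if other == wallet:
            continue
        overlap = len(owned & items)
        if overlap == 0:
            continue  # no shared taste, ignore this wallet
        similarity = overlap / len(owned | items)  # Jaccard similarity
        for item in items - owned:
            scores[item] += similarity
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return ranked[:top_n]


# Hypothetical wallet holdings.
holdings = {
    "0xA": {"jacket", "sneaker"},
    "0xB": {"jacket", "sneaker", "hoodie"},
    "0xC": {"sneaker", "cap"},
    "0xD": {"dress"},
}

print(recommend(holdings, "0xA"))
```

The same scoring could, in principle, drive the airdrop engine: instead of showing the top-ranked item to the user, a project would send it to the wallets that score highest for it.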

NEXT STEPS:

Feel free to DM me on Twitter @nakedcollector if you have an interesting Web3 idea or project.

Thanks for reading!